What is web scraping?

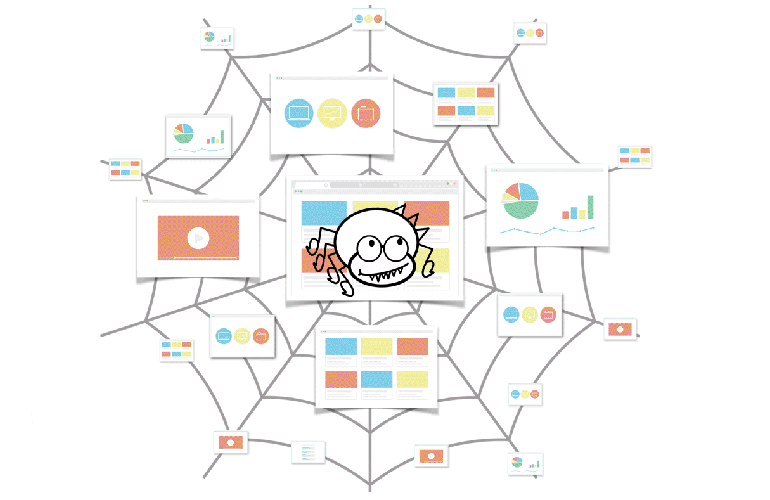

Web scraping is the process of collecting structured web data in an automated fashion. It's also called web data extraction. Web scraping has existed for a long time and, in its good form, it's a key underpinning of the internet. Common uses include saving product images and attributes, gathering company data, price monitoring (hotel prices, rental space, product prices, etc.), price intelligence, news monitoring, and more.

Web data extraction is used by people and businesses who want to make use of the vast amount of publicly available web data.

If you've ever copied and pasted information from a website, you've performed the same function as any web scraper, only on a microscopic, manual scale.
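To make the idea concrete, here is a minimal sketch of that copy-and-paste step done by a program. It uses only Python's standard `html.parser` module. The sample HTML, the `name` and `price` class names, and the `ProductParser` and `scrape_products` helpers are all made up for illustration. A real scraper would first download the page and would have to match that site's actual markup.

```python
from html.parser import HTMLParser

# Hypothetical sample page; a real scraper would download this HTML first.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Desk lamp</span><span class="price">19.99</span></li>
  <li class="product"><span class="name">Office chair</span><span class="price">129.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collects name/price records from <span class="name"> and <span class="price">."""

    def __init__(self):
        super().__init__()
        self.products = []   # finished records
        self._field = None   # field the parser is currently inside, if any
        self._current = {}   # record being built for the current <li>

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "span" and css_class in ("name", "price"):
            self._field = css_class

    def handle_data(self, data):
        # Only keep text that sits inside one of the spans we care about.
        if self._field:
            self._current[self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None
        elif tag == "li" and self._current:
            # The closing </li> marks the end of one product record.
            self.products.append(
                {"name": self._current["name"], "price": float(self._current["price"])}
            )
            self._current = {}


def scrape_products(html):
    """Turn raw HTML into a list of structured product records."""
    parser = ProductParser()
    parser.feed(html)
    return parser.products


products = scrape_products(SAMPLE_HTML)
for product in products:
    print(product["name"], product["price"])
```

The point of the sketch is the shape of the task: unstructured page markup goes in, and structured records that can feed something like price monitoring come out.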

Is it illegal to scrape a website?

Web scraping and crawling aren't illegal by themselves. After all, you could scrape or crawl your own website for test purposes. Big companies use web scrapers for their own gain but don't want others to use bots against them. There are numerous court cases about web scraping.

- In 2001, a travel agency sued a competitor who had "scraped" its prices from its website to help the rival set its own prices. The judge ruled that the fact that this scraping was not welcomed by the site's owner was not sufficient to make it "unauthorized access" for the purpose of federal hacking laws.

- In the summer of 2017, LinkedIn sued hiQ Labs, a San Francisco-based startup. hiQ was scraping publicly available LinkedIn profiles to offer clients, according to its website, "a crystal ball that helps you determine skills gaps or turnover risks months ahead of time." You might find it unsettling to think that your public LinkedIn profile could be used against you by your employer. Yet a judge on Aug. 14, 2017 decided this is okay. Judge Edward Chen of the U.S. District Court in San Francisco agreed with hiQ's claim in a lawsuit that Microsoft-owned LinkedIn violated antitrust laws when it blocked the startup from accessing such data. He ordered LinkedIn to remove the barriers within 24 hours. LinkedIn has filed to appeal.

- Andrew Auernheimer was convicted of hacking based on the act of web scraping. Although the data was unprotected and publicly available via AT&T's website, the fact that he wrote web scrapers to harvest that data en masse amounted to a "brute force attack". He did not have to consent to terms of service to deploy his bots and conduct the web scraping. The data was not available for purchase. It wasn't behind a login. He did not even gain financially from the aggregation of the data. Most importantly, it was buggy programming by AT&T that exposed this information in the first place. Yet Andrew was at fault. This isn't just a civil suit anymore. This charge is a felony violation that is on par with hacking or denial of service attacks and carries up to a 15-year sentence for each charge.

Conclusion

As the courts try to further decide the legality of scraping, companies are still having their data stolen and the business logic of their websites abused. Instead of looking to the law to eventually solve this technology problem, it's time to start solving it with anti-bot and anti-scraping technology today.